Uncertainty in Geospatial Analyses

The collection and analysis of environmental data, and the site management decisions based on these data, are subject to uncertainty. The most obvious source of uncertainty in geospatial analysis is the inability to sample everywhere, which means that a range of potential values exists at each unsampled location. Geospatial methods differ in their ability to provide quantitative measures of uncertainty for predictions at unsampled locations. This section provides an overview of the uncertainty that results from sampling bias and error and from differences in analytical precision. Information about the uncertainty associated with different geospatial methods is presented in Evaluate Geospatial Method Accuracy. The sources of uncertainty should be identified and documented, and their effects should be minimized to the extent possible.

Sampling Bias and Error

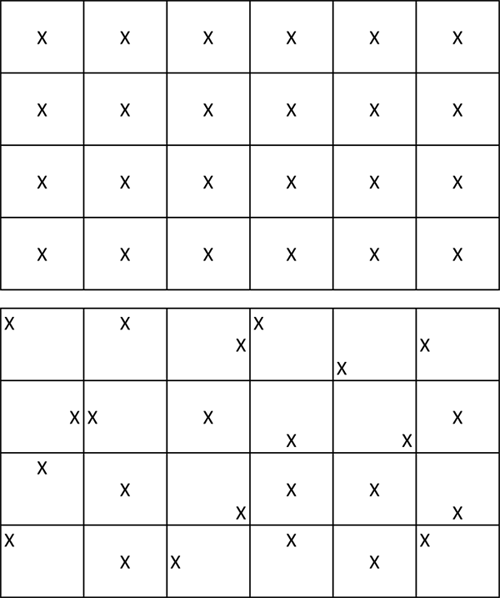

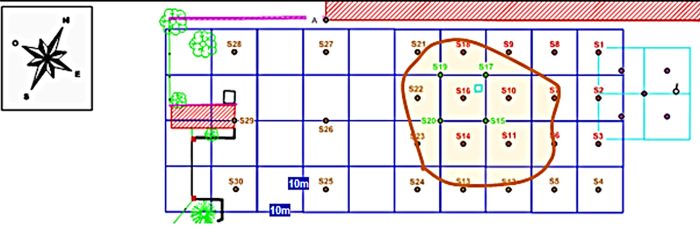

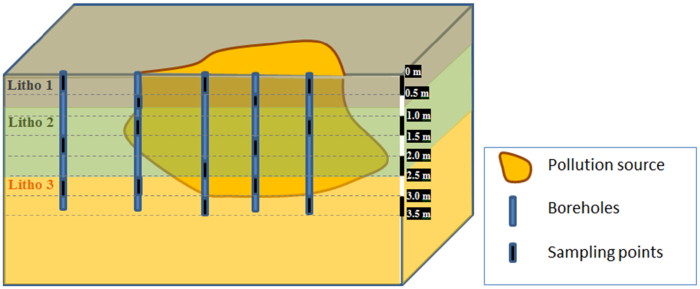

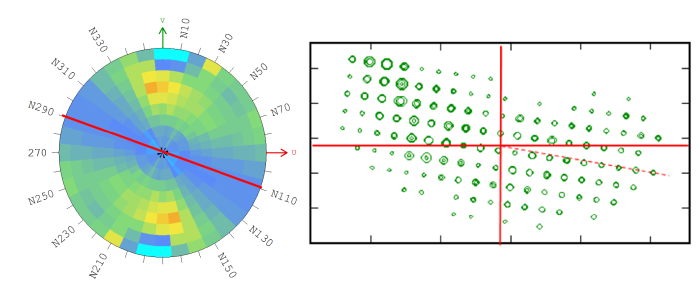

The quality of the results of any geospatial method depends on the quality of the input data. Often, only one sample is collected at each borehole and geological layer, which may introduce bias because the collected sample is assumed to be representative of the entire stratigraphic unit being characterized. When sampling for lateral delineation, systematic grid-based sampling may be used for the initial site characterization. This type of sampling, however, may introduce bias of its own: because sample pairs are aligned with the grid, an apparent directional trend in the data may reflect the sampling layout rather than the site itself. Figure 11 shows an example of results from systematic grid-based sampling.

Figure 11. Example of systematic sampling on a 25 m × 25 m grid (left) and possible anisotropy detected along the sampling direction (right). True anisotropy or sampling effect?
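One way to see how a regular grid can produce a sampling effect is to list the separation distances available between sample pairs in each direction. The sketch below is illustrative only and is not taken from this guidance: the 5 × 5 grid size, the function names, and the 1-degree angular tolerance are assumptions. It shows that pairs aligned with the grid axes and pairs aligned with the diagonals occur at different distances, so directional comparisons on gridded data are not made at matching lags.

```python
import numpy as np

def grid_points(n=5, spacing=25.0):
    """Hypothetical n x n sampling grid with the given spacing in metres."""
    xs, ys = np.meshgrid(np.arange(n) * spacing, np.arange(n) * spacing)
    return np.column_stack([xs.ravel(), ys.ravel()])

def lags_by_direction(points, azimuth_deg, tol_deg=1.0):
    """Unique pair separations (m) for pairs oriented within tol_deg of azimuth_deg.

    The azimuth is measured counterclockwise from the x-axis, and opposite
    directions (for example 0 and 180 degrees) are treated as the same.
    """
    # diff[a, b] is the vector from point a to point b.
    diff = points[None, :, :] - points[:, None, :]
    i, j = np.triu_indices(len(points), k=1)  # each pair counted once
    dx, dy = diff[i, j, 0], diff[i, j, 1]
    dist = np.hypot(dx, dy)
    angle = np.degrees(np.arctan2(dy, dx)) % 180.0
    delta = np.abs(angle - (azimuth_deg % 180.0))
    delta = np.minimum(delta, 180.0 - delta)  # wrap-around angular difference
    return np.unique(np.round(dist[delta <= tol_deg], 2))

pts = grid_points()
along_grid = lags_by_direction(pts, 0.0)   # pairs along a grid axis
diagonal = lags_by_direction(pts, 45.0)    # pairs along the grid diagonal
print(along_grid.tolist())  # [25.0, 50.0, 75.0, 100.0]
print(diagonal.tolist())    # [35.36, 70.71, 106.07, 141.42]
```

In this assumed layout, the shortest lag along a grid axis is 25 m, while the shortest diagonal lag is about 35 m. Short-range variability is therefore resolved only along the sampling directions, which is one reason an apparent anisotropy along the grid should be checked before it is treated as a property of the site.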

Analytical Precision

Analytical results obtained from laboratories carry uncertainty, which should be clearly identified. Similarly, if field-based measurement technologies are used, the accuracy of each device must be identified. In addition, laboratory analysis is performed on a small fraction of the total sample collected, and the sample itself is a minimal part of the geological unit being characterized. The result is nevertheless assumed to be representative of the whole geological unit. This potential bias should be taken into account when interpreting the final results.
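As an illustration of how analytical precision might be documented, the sketch below computes the relative percent difference (RPD) between a primary result and its duplicate. RPD is one common precision measure and is not prescribed by this section; the function name and the example concentrations are hypothetical.

```python
def relative_percent_difference(primary, duplicate):
    """RPD (%) between a primary result and its duplicate.

    RPD = |primary - duplicate| / mean(primary, duplicate) * 100.
    Both results are assumed to be positive concentrations in the same units.
    """
    if primary <= 0 or duplicate <= 0:
        raise ValueError("results must be positive to compute an RPD")
    mean = (primary + duplicate) / 2.0
    return abs(primary - duplicate) / mean * 100.0

# Hypothetical duplicate pair (units arbitrary, for illustration only).
rpd = relative_percent_difference(10.0, 12.0)
print(f"RPD = {rpd:.1f}%")  # RPD = 18.2%
```

A value such as this describes only the repeatability of the measurement. It does not address whether the analyzed fraction is representative of the sample or of the geological unit, which remains a separate source of uncertainty.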